Making My Interactive Memoji

Interactive Memoji for the Web

Memoji?

Introduced with iOS 12, Memoji is Apple's progression of the ubiquitous emoji. Set apart from its ancestors, Memoji are personalised caricatures, offering near infinite options for customisation.

Memoji's good looks haven't gone unrecognised, and many people use static, immobile Memoji as profile pictures, or as avatars on their website. I wanted to follow suit, but by opting for the static image, you really lose their sense of personality and liveliness. I didn't want to settle for this, and chose to explore the possibility of embedding dynamic and interactive Memoji instead.

The fact that they're fully rigged, and tightly integrated with iOS devices' built-in face tracking technology, makes them really easy to create, and perfect for the majority who don't possess the artistic aptitude to use Blender for such tasks. Going down the Blender route can look really great[1] however, and should be the default first choice for anyone who's able to.

They're not without their flaws though; tightly integrated into Apple's own ecosystem, Memoji and the mechanisms to create them (i.e. AvatarKit) are not exposed outside of iMessage and FaceTime. Apple has a history of limiting the use of their emojis[2], and I imagine the restrictions on Memoji stem from the same corporate delusion. They'll probably also tell you that using Memoji outside of their ecosystem is not allowed. Oops 🖕.

So, this is what we'll be creating:

The Plan of Attack

N.B. A lot of this post didn't initially work - so it's just here to document the journey.

✨ Skip ahead a bit if you're only interested in what did work.

Firstly let's set out what we're trying to achieve, then we'll visit each of them in turn:

- Find a way of creating a Memoji

- Get said Memoji into a useable format

- Insert Memoji into our website

- Allow users to interact with the Memoji

Like a record baby…

Creating the Memoji is probably the simplest of the steps, although there are a few things to note.

We're going to use iMessage to create our Memoji. Importantly we want to create a video, not a Memoji sticker.

You want to record yourself rotating your head while keeping eye contact with the camera. The initial idea was to have the Memoji's gaze following your mouse, but due to the limitations of our method, we can't converge or diverge the eyes, nor face the head straight ahead. These issues ruin the effect if we go for a 'following the mouse' type effect, so it's better to go for a 'tilting the head' type effect instead.

Have a play with the finished product, and compare that with your actual head:

Imagine you're holding a hula hoop in front of you with both hands, and that it's positioned so your face is in line with the centre. Now tilt your head (not your eyes) so that you're looking at the top of the hoop. Follow the hoop around 360°: no problem, we're able to recreate this.

Now looking at the top of the hoop again, move straight down to the bottom of the hoop without following its curvature. And the same from left to right. Repeat the same with the finished Memoji above. Notice how in real life your head moves away from the outline of the imaginary hoop, but how in the Memoji the head doesn't tilt to look within the hoop. This is the limitation we're talking about.

It's kinda okay though, your mouse isn't normally over the Memoji, so it isn't too much of an issue.

Anyway, recording the video! Mimic what you see in the finished article. Start the video with your head slightly tilting up, and slowly rotate it around 360°.

You want to try and maintain a constant distance from the camera, as you want the first and last frame to be as close together as possible to hide the fact that the motion isn't actually continuous. This took many attempts, and while the recording I settled on isn't perfect, it's good enough - and I'm not rerecording it for the 48th time 😅.

N.B. You'll probably find that no matter how hard you try, the raw video will never loop due to the 'reset' animation added at the end.

🔁 Pause for a second at the end of the recording and then trim the video to achieve the loop. It'll still take a few tries.

Channel Your Inner Alpha!

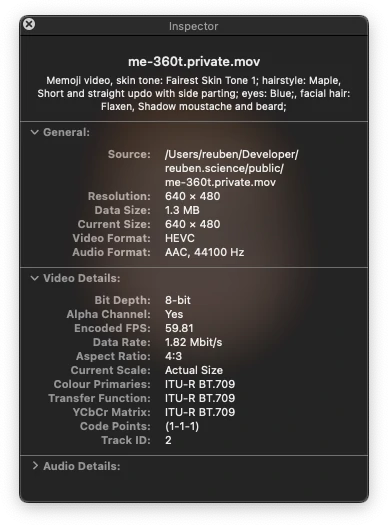

Apple doesn't expose the Memoji in any sort of 'raw' or 3D format. Instead, all we've got is a video. A .mov. Straight out of my iPhone 11, across iMessage, and into trusty FFmpeg, we can see some quite detailed information about the file.

    Input #0, mov,mp4,m4a,3gp,3g2,mj2, from './me-360t.mov':
      Metadata:
        major_brand : qt
        minor_version : 0
        compatible_brands: qt
        creation_time : 2020-11-24T18:30:38.000000Z
        com.apple.quicktime.description: Memoji video, skin tone: Fairest Skin Tone 1; hairstyle: Maple, Short and straight updo with side parting; eyes: Blue;, facial hair: Flaxen, Shadow moustache and beard;
      Duration: 00:00:05.25, start: 0.000000, bitrate: 1992 kb/s
        Stream #0:0(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, mono, fltp, 63 kb/s (default)
        Metadata:
          creation_time : 2020-11-24T18:30:38.000000Z
          handler_name : Core Media Audio
        Stream #0:1(und): Video: hevc (Main) (hvc1 / 0x31637668), yuv420p(tv, bt709), 640x480, 1753 kb/s, 57.56 fps, 60 tbr, 600 tbn, 600 tbc (default)
        Metadata:
          creation_time : 2020-11-24T18:30:38.000000Z
          handler_name : Core Media Video
          encoder : HEVC

Firstly we can see that we're dealing with a .mov container holding HEVC aka H.265 encoded data. This is good news: a modern-ish format that supports alpha channels. Interestingly Apple also embeds plenty of metadata about the Memoji in the file. Useful for an accessibility description maybe 😯!

    com.apple.quicktime.description:
      Memoji video,
      skin tone: Fairest Skin Tone 1;
      hairstyle: Maple, Short and straight updo with side parting;
      eyes: Blue;
      ,facial hair: Flaxen, Shadow moustache and beard;

The file format supports alpha channels! Great! Would Apple be so good as to make use of it? Opening the file in QuickTime we can see that there is indeed an alpha channel - and it's being used 🎉!

While the file has an alpha channel, I couldn't get FFmpeg to acknowledge it. Looking in the output above we only see a pixel format of yuv420p listed. It seems that as of now, FFmpeg's HEVC decoder doesn't support H.265 with yuva420p pixel data[3] - the key difference between yuva420p and yuv420p being the a, for alpha.

My initial idea was to use FFmpeg to transcode the H.265 into VP8/VP9, given H.265's less than stellar cross-browser compatibility[4]. I'd then have support for all major browsers using both formats combined[5].

While I was able to convert the .mov to .webm, due to FFmpeg's lack of yuva420p support I was losing the alpha channel - which we're trying to preserve 🙃.

Changing Tack

Rereading the FFmpeg output, I noticed the handler_name was Core Media Video. That's a very 'Apple' name, and indeed it's the name of Apple's shared macOS/iOS media framework. Given that QuickTime reports it, iOS generated it, and Preview shows it, I guessed there was a fair chance that tools I'd need to make use of the yuva420p alpha channel were already built in to macOS. If I could use these, then I could export the frames, and reencode them to webm using FFmpeg.

Again with Apple, this turned out to be an issue of exposing the means to the end user. QuickTime 7 used to offer the export to image sequence functionality[6], but sometime between versions 7 and 10.6 the option was canned. QuickTime 7 isn't supported after macOS Mojave[7] 😢. iMovie also lacks the capability. I can confirm that Final Cut Pro X does have it though!

I don't really want to buy FCP X, and while it's only one click away on many-a-site 👀…it's a bit overkill for such a small task.

uPVC

Given that the Core Media framework is built in to macOS, I decided the path of least resistance was probably to just write a quick CLI tool to extract the frames using AVFoundation (which uses Core Media under the hood). And that's exactly what I did:

    ...
    let trackReaderOutput = AVAssetReaderTrackOutput(track: videoTrack, outputSettings: [String(kCVPixelBufferPixelFormatTypeKey): NSNumber(value: kCVPixelFormatType_32BGRA)])
    ...
    while let sampleBuffer = trackReaderOutput.copyNextSampleBuffer() {
        if let imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) {
            let ciimage: CIImage = CIImage(cvPixelBuffer: imageBuffer)
            if let colorSpace = CGColorSpace(name: CGColorSpace.sRGB) {
                let format = CIFormat.RGBA16 // 16-bit RGBA
                let quality = 1.0 // 1.0 = lossless
                ...
                try context.writePNGRepresentation(of: ciimage, to: outURL, format: format, colorSpace: colorSpace, options: [kCGImageDestinationLossyCompressionQuality as CIImageRepresentationOption: quality])
            }
        }
        frameIndex += 1
    }
    ...

Written in Swift, it just iterates over the frames of a video file (which could theoretically be any video format supported by AVFoundation), and exports 16-bit RGBA lossless PNGs. I'm not a Swift native, so the main logic was ~~copied~~ adapted 👌 from Stack Overflow[8]. I also ended up adding the option to export only every x-th frame (those where n % x === 0): for reasons I'll come on to later 😏.

Return of the FFmpeg!

Armed with our sequence of image files, we're ready to merge them back into a video using FFmpeg.

ffmpeg -framerate 60 -i ./frame-%0d.png -c:v libvpx-vp9 -pix_fmt yuva420p -speed 0 -crf 16 -an ./me.webm

FFmpeg does support yuva420p with webm, so we're good, and the file size isn't terrible - at around 1MB.

Rough as a Bear's Arse

All that's left now is to embed the video, and hook up mousemove event handlers to scrub through the video. Surely.

In Safari this works fine - buttery smooth - Chrome however really chokes on the video. I tried lowering the quality via the -crf parameter in FFmpeg, but no matter how low the quality, the FPS, or how much event handler throttling I applied, Chrome still jittered and stalled its way through the animation. The same applied for VP8.

Given that Safari has no trouble I wonder if this is an issue of the HTML5 video element's scrubbing not being optimised for animation via .currentTime in Blink-based browsers. I also tried this in combination with window.requestAnimationFrame to no avail.

Another difference between the browsers was that Safari was consuming the .mov file, while Chrome was using the .webm file. They both have similar file sizes, but very different compression algorithms. Regardless, most compressed video cannot be played back in reverse, or be seeked (sic) at realtime speeds.

As an analogy, most video compression works a bit like a zip (no pun intended):

- 🦷 Teeth of the zip represent video frames

- 👍 You can unzip your coat in one direction, and it works fine.

- 👎 You can't unzip your coat in reverse - or skip bits out - leaving them zipped together.

- 🤐 Picking a random point on the zip, all preceding zip must be undone in order to reach it.

Keeping it simple - in video you have I frames, and P frames[9]. I frames are like the frames that we extracted using uPVC - whole images in their own right. P frames are not bona fide images. Instead they contain references to previous I and P frames, specifying elements they have in common to save space, and how they differ.

- To decode an I frame you just need that frame 👌.

- To decode a P frame however you also need all preceding frames that it references, and all preceding frames that all those frames reference … all the way back to the closest I frame[10].

Scrubbing through the video (seeking in response to mouse movements) would require the decoder to jump around 'the zip' and necessitate 'unzipping' a lot of previous (which is ahead if playing in reverse) frames / footage. Perhaps this aspect of video encoding is where some of the issues arose.

This doesn't fully explain the problems as there wasn't such an issue with H.265 in Safari - but it most probably contributed. Perhaps Chrome didn't buffer the uncompressed video into memory, while Safari did 🤷‍♀️.
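To put rough numbers on the zip analogy above, here is a minimal TypeScript sketch. It assumes a deliberately simplified stream: one I frame every `keyframeInterval` frames, with every other frame being a P frame that references only the frame directly before it. Real encoders are more flexible than this, and the names `framesToDecode` and `scrubCost`, along with the interval of 60, are hypothetical and chosen purely for illustration.

```typescript
// Simplified model (an assumption, not how any particular codec is configured):
// frames 0, keyframeInterval, 2 * keyframeInterval, ... are I frames, and each
// P frame depends only on the frame immediately before it.
function framesToDecode(target: number, keyframeInterval: number): number {
  if (!Number.isInteger(target) || target < 0) {
    throw new RangeError("target must be a non-negative integer");
  }
  if (!Number.isInteger(keyframeInterval) || keyframeInterval < 1) {
    throw new RangeError("keyframeInterval must be a positive integer");
  }
  // Index of the closest I frame at or before the target.
  const previousKeyframe = target - (target % keyframeInterval);
  // Everything from that I frame up to and including the target gets decoded.
  return target - previousKeyframe + 1;
}

// Total frames decoded when visiting `path` in order, assuming the decoder
// caches nothing between seeks (a worst case, also an assumption).
function scrubCost(path: number[], keyframeInterval: number): number {
  return path.reduce(
    (sum: number, frame: number) => sum + framesToDecode(frame, keyframeInterval),
    0,
  );
}

console.log(framesToDecode(60, 60)); // landing exactly on an I frame
console.log(framesToDecode(59, 60)); // the last P frame before the next I frame
console.log(scrubCost([59, 58, 57], 60)); // scrubbing backwards over three frames
```

Under these assumptions, stepping backwards over just three frames costs 60 + 59 + 58 = 177 decodes, whereas three standalone images would cost three. Whether a browser really behaves this badly depends on how much decoded video it keeps around.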

Abort, Abort

Given that the video method wasn't going to work, I had to think of an alternative. If we run with the idea of video simply being a series of frames, it's not a crazy idea to skip the whole 'video' encoding malarkey and just deal with frames directly.

We've already done the hard work of extracting the frames, and have a folder of .png files.

I wrote a small React component to listen for global mousemove events, and display the corresponding frame. This works great, and surprisingly is a lot smoother than the video! I'm not going to go over the ins and outs of it here, but I will point out a few things of note.

Firstly the interactivity is a bonus, and in order to not get in the way of more pressing resources, we load the frames via a useEffect hook. We load a sensible defaultFrame first and that serves as a placeholder until the rest of them have loaded. We listen for the .decode() Promise on the defaultFrame Image to signal that it's loaded and then animate it in.

Downloading lots of (smallish) image files isn't a problem for people on fast internet connections, but consideration has to be taken for those on slower connections. The React component allows about 5 seconds for the images to download before cancelling all but the defaultFrame request. This allows any other more important content to come through faster, and gracefully falls back to a static Memoji.

At this point we still don't have interactivity. That only occurs once all the frames have downloaded - to stop jitter in the first few moments. In testing it was quite common to stop moving the mouse just before the point at which the frames became available. When the user then resumes mouse movement there's a jump between the defaultFrame placeholder and the required frame.

To overcome this, we listen for the user's mouse movement even before the frames have downloaded. There isn't the option to directly query the user agent for the mouse position, so we rely on recording these preemptive events to get it - and then smoothly transition to the corresponding frame. It's better to transition when the mouse isn't moving than when it is.

This works fine on traditional mouse and keyboard devices, but - for obvious reasons - works a little differently on touchscreens. We listen for the onTouch counterparts, but also animate the Memoji by default - so as not to rely on the user touching the Memoji. Once they have, touch interaction takes over. As a next step I'd like to overlay a call-to-action to highlight this to touch users, and make the animation a little more natural.
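For a rough idea of what sits at the core of a component like this, here's a minimal TypeScript sketch of mapping a pointer position to a frame. It is not the component itself: the name `frameIndexForPointer`, the `PointerPosition` type, and the assumptions that frame 0 looks straight up and that later frames sweep clockwise are all hypothetical, so flip the direction if your recording rotates the other way.

```typescript
interface PointerPosition {
  x: number;
  y: number;
}

// Maps a pointer position to a frame index. Assumes (hypothetically) that
// frame 0 has the head tilted straight up, and that the remaining frames
// sweep clockwise through a full 360° turn.
function frameIndexForPointer(
  pointer: PointerPosition,
  centre: PointerPosition,
  frameCount: number,
): number {
  if (!Number.isInteger(frameCount) || frameCount < 1) {
    throw new RangeError("frameCount must be a positive integer");
  }
  const dx = pointer.x - centre.x;
  const dy = pointer.y - centre.y;
  // The pointer is exactly on the centre: no direction, so use frame 0.
  if (dx === 0 && dy === 0) return 0;
  // Screen y grows downwards, so "up" is -dy. atan2(dx, -dy) is 0 when the
  // pointer is directly above the centre and increases clockwise.
  const angle = Math.atan2(dx, -dy); // in the range (-π, π]
  const fullTurn = 2 * Math.PI;
  const turn = ((angle + fullTurn) % fullTurn) / fullTurn; // 0 <= turn < 1
  // The trailing modulo guards against rounding up to frameCount itself.
  return Math.floor(turn * frameCount) % frameCount;
}

const centre: PointerPosition = { x: 200, y: 200 };
console.log(frameIndexForPointer({ x: 200, y: 100 }, centre, 105)); // above
console.log(frameIndexForPointer({ x: 300, y: 200 }, centre, 105)); // right
console.log(frameIndexForPointer({ x: 200, y: 300 }, centre, 105)); // below
console.log(frameIndexForPointer({ x: 100, y: 200 }, centre, 105)); // left
```

In a browser the centre would typically come from the element's `getBoundingClientRect()`, and the pointer from the `clientX` and `clientY` of the mousemove or touch event. Keeping the mapping as a pure function also makes it easy to feed in the last recorded position once the frames finish loading.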

A Little on the Large Side

Video compression may have hindered our dreams of smooth scrubbing, but it's used for a reason. The 315 frames only took up around 1MB. Extracted in their raw .png form they occupy a staggering 65.4MB. Ouch 😳. Admittedly .png probably isn't the most efficient format for storing high fidelity graphics, but it is lossless, and does support alpha channels: fine for an intermediate format.

Firstly I saved 2/3 off the total size by only keeping every 3rd frame. While perhaps counterintuitive, this didn't affect the smoothness of the final result in any perceivable way.
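As a quick illustration of the frame thinning, here's a small TypeScript sketch of the "keep frame n when n % x === 0" rule. The extraction tool itself is written in Swift, so this is only a model of the selection logic, and `keptFrameIndices` is a hypothetical name.

```typescript
// Returns the indices of the frames that survive when only every `step`-th
// frame is exported, i.e. those where index % step === 0.
function keptFrameIndices(totalFrames: number, step: number): number[] {
  if (!Number.isInteger(totalFrames) || totalFrames < 0) {
    throw new RangeError("totalFrames must be a non-negative integer");
  }
  if (!Number.isInteger(step) || step < 1) {
    throw new RangeError("step must be a positive integer");
  }
  const kept: number[] = [];
  for (let index = 0; index < totalFrames; index += step) {
    kept.push(index);
  }
  return kept;
}

const kept = keptFrameIndices(315, 3);
console.log(kept.length); // number of frames left from the original 315
console.log(kept.slice(0, 4)); // the first few surviving frame indices
```

With 315 frames and a step of 3 this leaves 105 frames, the first few being 0, 3, 6 and 9.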

ImageOptim managed to reduce the size down further by 47%. But that's still not anywhere near where we need it to be.

I settled on .webp. Support is good[11], and I set about converting the .png files. The cwebp tool[12] is the way to go, and .webp itself has a multitude of options to choose from when encoding the image.

for file in *.png; do cwebp -lossless -mt "./$file" -o "./${file//\.png/.webp}"; done

It's a really neat format and supports both lossless, and lossy compression. It even has a middle ground near_lossless option. Even with lossless compression, it managed to reduce the total burden by a massive 93% to only 4.6MB! While really impressive, this is still a bit hefty, as I was hoping to dip into the KB range.

After going through many permutations, I settled on a lossy quality of 80. Going lower reduced the image quality, but didn't justify the cost, giving only negligible improvements in file size. I also used cwebp's builtin crop function to try and shave off every last byte.

for file in *.png; do cwebp -q 80 -mt -crop 100 26 459 350 "./$file" -o "./${file//\.png/.webp}"; done

After running this, and cooking an egg on my CPU 🍳, I was left with a total size of 868KB. Pretty fucking good 👌. A combined saving of just under 99%!
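As a sanity check on the percentages quoted above, the arithmetic can be sketched in a few lines of TypeScript. The sizes are the ones from this post. Treating 1MB as 1000KB is an assumption, as is the hypothetical `savingPercent` helper, though the conclusion is the same with 1024.

```typescript
// Sizes quoted in the post, in KB. Treating 1 MB as 1000 KB is an assumption.
const KB_PER_MB = 1000;
const rawPngKb = 65.4 * KB_PER_MB; // extracted .png frames
const losslessWebpKb = 4.6 * KB_PER_MB; // after cwebp -lossless
const lossyWebpKb = 868; // after cwebp -q 80 with cropping

// Percentage saved when going from `beforeKb` to `afterKb`.
function savingPercent(beforeKb: number, afterKb: number): number {
  return (1 - afterKb / beforeKb) * 100;
}

console.log(savingPercent(rawPngKb, losslessWebpKb).toFixed(1)); // lossless .webp
console.log(savingPercent(rawPngKb, lossyWebpKb).toFixed(1)); // lossy .webp
```

That works out to roughly 93.0% for lossless and 98.7% for the final lossy frames, which matches the "93%" and "just under 99%" figures.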

.webp itself is based on the VP8 video codec that we wanted to use initially. Given this, I guess the savings aren't that surprising, and basically means that we've come full circle, just without the limitations of video compression.

There are some avenues to explore to try and reduce the total file size some more. .webp supports animation, but I don't think the ability to scrub through animated images is exposed in the web environment at the present time, and it may well suffer from the same issues as VP8/VP9. Maybe serving the frames as an animated .webp file and then decoding them to canvas is an option - but I am yet to explore the possibility.

A simpler option might be to combine the frames into a sprite sheet.

montage ./*.webp -define webp:lossless=true -geometry '1x1+0+0<' -tile 10x -background none ./output.webp

cwebp -q 80 ./output.webp -o ./compressed.webp

I've had a play using ImageMagick's montage, and I've only been able to reduce the total size to about 715KB after lossy compression. I still need to test if this meagre compression benefit outweighs the speed benefits that we achieve by serving individual frames concurrently over HTTP/2.
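If the sprite sheet route wins out, displaying a frame becomes a matter of working out where its cell sits in the sheet. The TypeScript sketch below assumes the 10-column layout from the montage command above, filled left to right then top to bottom, with every cell being the 459×350 crop used earlier. `spriteCell` and `backgroundPosition` are hypothetical helpers, not part of the current component.

```typescript
interface SpriteCell {
  x: number; // left edge of the frame within the sheet, in pixels
  y: number; // top edge of the frame within the sheet, in pixels
}

// Locates frame `index` in a sheet that is `columns` cells wide, filled
// row by row, where every cell is frameWidth x frameHeight pixels.
function spriteCell(
  index: number,
  columns: number,
  frameWidth: number,
  frameHeight: number,
): SpriteCell {
  if (!Number.isInteger(index) || index < 0) {
    throw new RangeError("index must be a non-negative integer");
  }
  if (!Number.isInteger(columns) || columns < 1) {
    throw new RangeError("columns must be a positive integer");
  }
  const column = index % columns;
  const row = Math.floor(index / columns);
  return { x: column * frameWidth, y: row * frameHeight };
}

// CSS background-position value that shifts the sheet so the cell is visible.
function backgroundPosition(cell: SpriteCell): string {
  return `${-cell.x}px ${-cell.y}px`;
}

console.log(backgroundPosition(spriteCell(0, 10, 459, 350))); // first frame
console.log(backgroundPosition(spriteCell(26, 10, 459, 350))); // row 2, column 6
```

For frame 26 this gives `-2754px -700px`. The same offsets would work as source coordinates when drawing to a canvas instead of using a CSS background.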

Are we nearly there yet?

While what we have works, it still has a number of caveats, chiefly the 868KB file size burden, and the limited axes the Memoji is able to move in. I'd still like to reduce the former, and to summarise, the avenues I'm currently exploring are:

- Animated .webp frames decoded to canvas

- Sprite sheets

- Load reduced quality frames for movement, and download higher quality once movement has stopped

- Only load frames that surround cursor's starting position

- .avif once support is good enough

The latter is more difficult, and would require a completely new approach. While the AvatarKit framework isn't officially exposed, people have managed to use it on sideloaded apps[13]. The 3D models are also stored at a known location[14]; extracting them shouldn't be difficult, but I have very little knowledge of how to composite the features into 'me', nor do I know how animating them would work. If this were to be possible, then it would solve the current limitations on movement - and - via WebGL the issues with file size.

[1] https://www.joshwcomeau.com/

[2] https://twitter.com/Sam0711er/status/959535639174746112

[3] https://trac.ffmpeg.org/ticket/7965

[4] https://caniuse.com/hevc

[5] https://caniuse.com/webm

[6] https://video.stackexchange.com/questions/4033/exporting-mov-vr-to-multiple-still-frames

[7] https://support.apple.com/kb/DL923?locale=en_GB

[8] https://stackoverflow.com/questions/39570745/accesing-individual-frames-using-av-player/39573702#39573702

[9] https://en.m.wikipedia.org/wiki/Video_compression_picture_types

[10] https://github.com/google/ExoPlayer/issues/2191#issuecomment-266783472

[11] https://caniuse.com/webp

[12] https://developers.google.com/speed/webp/docs/cwebp

[13] https://github.com/simonbs/SBSAnimoji

[14] https://www.reddit.com/r/jailbreak/comments/j2rxd5/question_is_there_a_way_to_ripexport/